April 21, 2024

AI as a Design and Planning Partner

By Matt Shaw

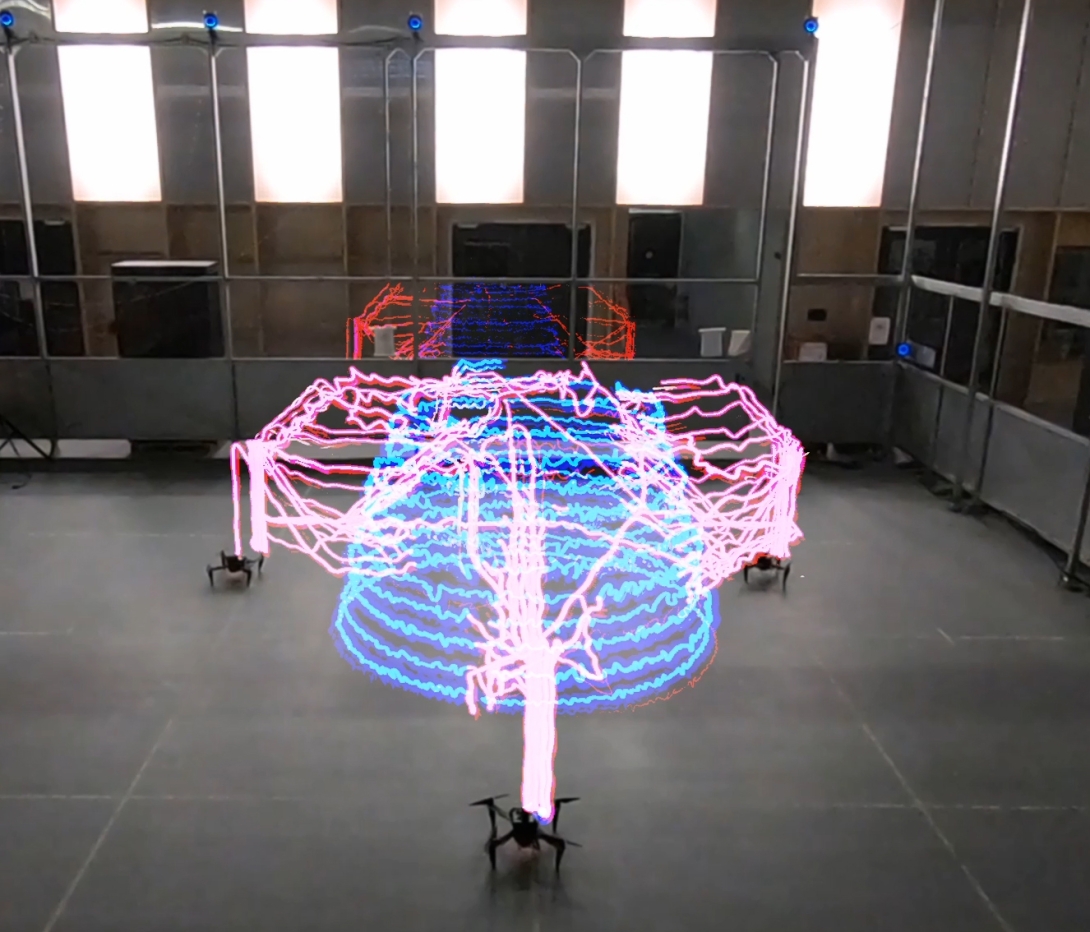

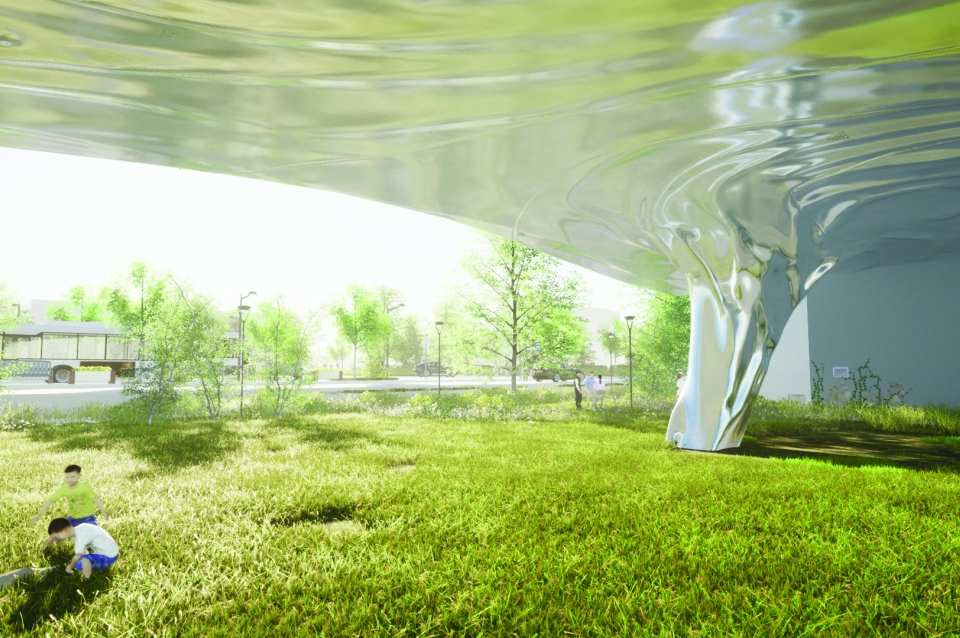

Weitzman’s Autonomous Manufacturing Lab used advanced computational methods to produce design concepts for a new building that would house Penn’s Center for Innovation & Precision Dentistry (CiPD), a hub for interdisciplinary research operated by the School of Dental Medicine and the School of Engineering and Applied Science. The project is a collaboration with Hyun (Michel) Koo of Penn Dental and Ellen Neises of PennPraxis.

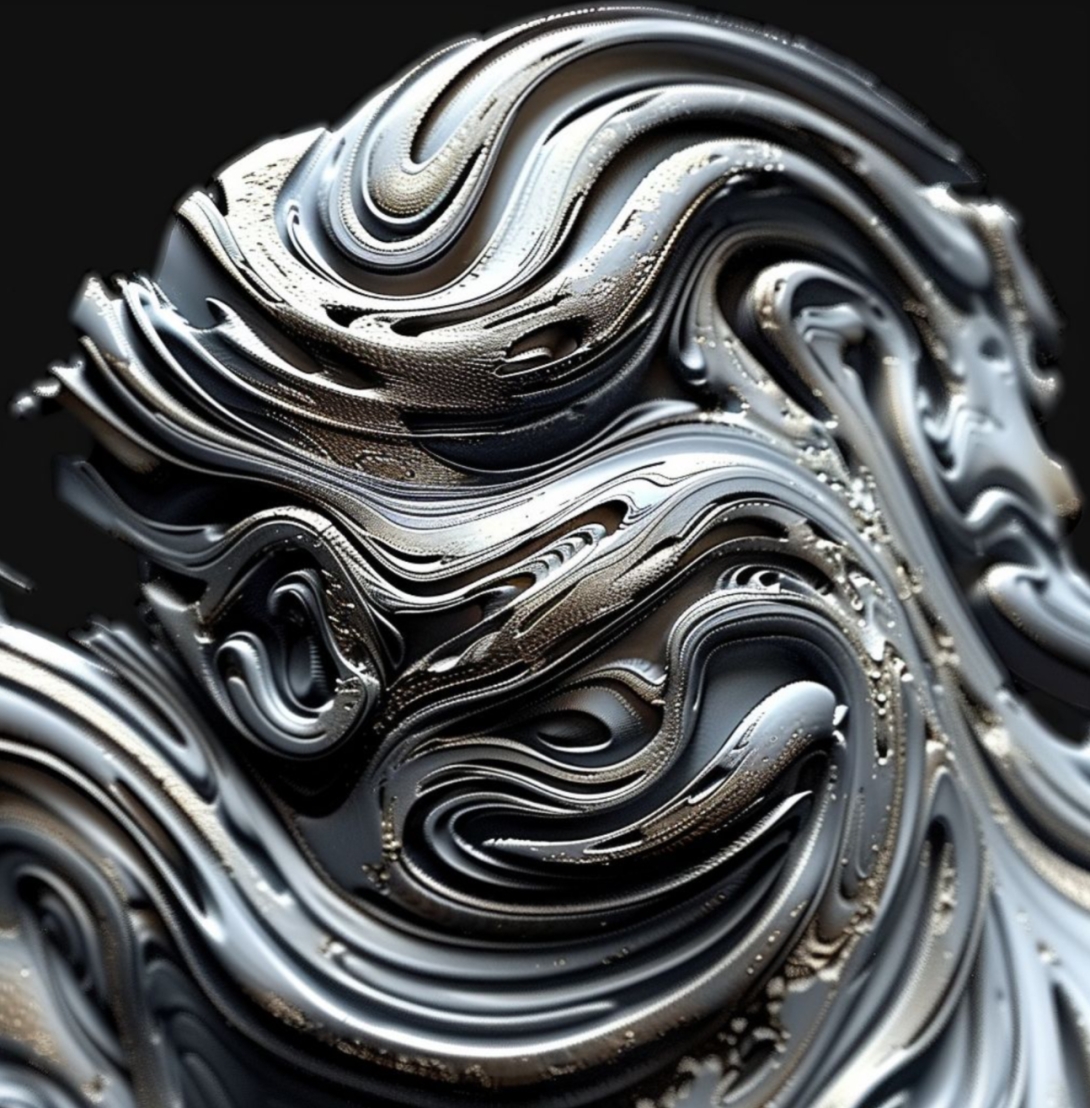

Master of Architecture student Kirah Cahill used generative AI to complete this still life for a third-year seminar with Ferda Kolatan, associate professor of architecture at Weitzman.

Master of Architecture students Jenna Arndt and Daniel Lutze produced this gothic study in Kolatan’s seminar.

Picturesque Grotto was produced by Master of Architecture students Mitchell Coziahr and Danny Jarabek in Kolatan's seminar.

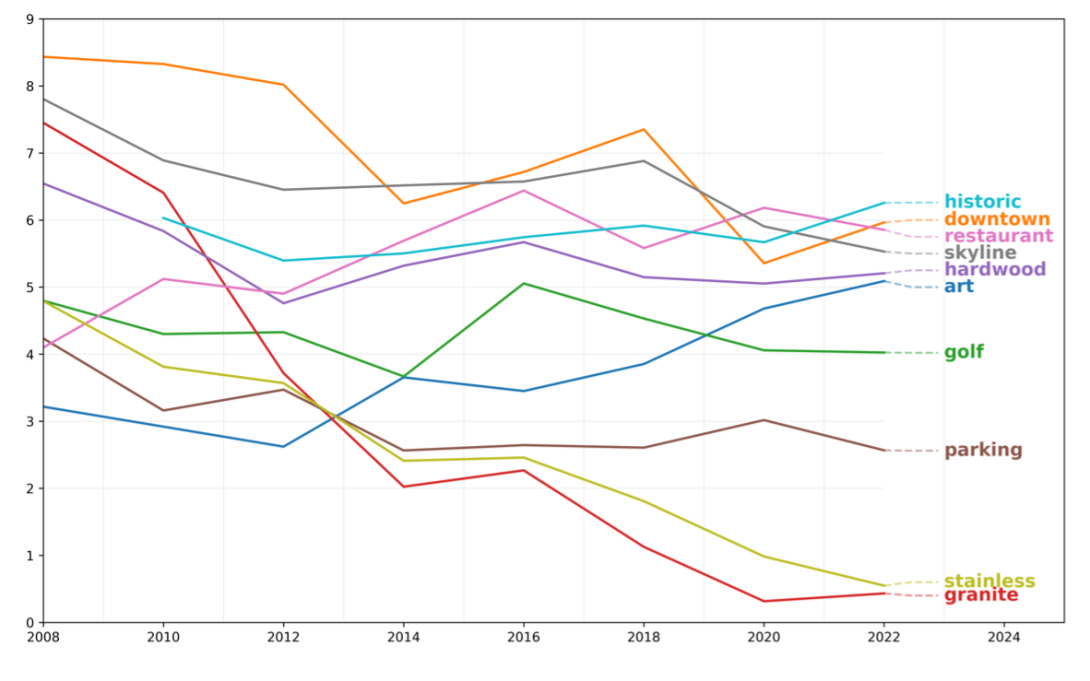

Charlotte, North Carolina's NoDa "arts district" is among the neighborhoods in 10 US cities that are experiencing a dramatic increase in the share of white residents. Elizabeth Delmelle, associate professor of city and regional planning and director of the MUSA Program, and her collaborator are using AI to analyze real estate listings.
